Your customer calls your business and hears a voice that sounds almost human. Something feels wrong. They can’t pinpoint it, but the interaction makes them uncomfortable.

This phenomenon plagues AI receptionists across industries. The uncanny valley in AI voice creates customer discomfort when synthetic voices sound too human but not quite right. Brand managers face a critical challenge: how do you deploy voice AI without creeping out customers?

Voice psychology directly impacts customer trust, satisfaction, and brand perception. Hospitality and luxury brands struggle with this balance between technological efficiency and human warmth.

What Is the Uncanny Valley?

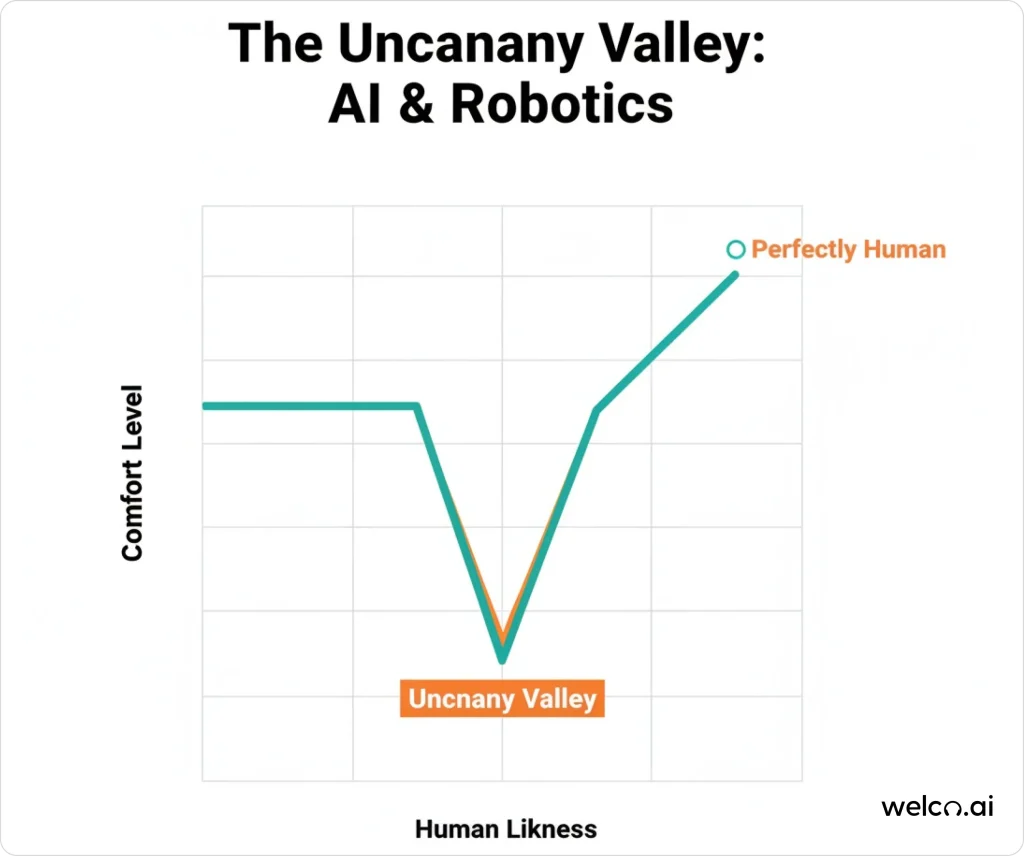

The uncanny valley describes the psychological discomfort people feel when encountering almost-human artificial entities. This phenomenon affects AI voices that sound too human but lack genuine human qualities. Understanding its origins and vocal applications helps businesses avoid customer discomfort.

Origins and definition of the Uncanny Valley

Roboticist Masahiro Mori introduced the uncanny valley theory in 1970. He proposed that human affinity for robots increases as they become more humanoid, but drops sharply when they appear almost, but not quite, human.

The valley represents the dip in comfort levels. People feel uneasy around entities that seem almost human but contain subtle imperfections. This creates cognitive dissonance and emotional disconnect.

The uncanny valley effect extends beyond visual appearance to voice interactions. AI voices trigger the same psychological response when they sound too human yet lack genuine human qualities.

Beyond visuals: the vocal uncanny valley

Voice AI creates unique uncanny valley experiences. Unlike visual robots, AI receptionists interact through speech alone. Customers form instant judgments about warmth, competence, and trustworthiness from voice cues.

AI receptionists often fall into this valley when they sound almost human but miss crucial vocal nuances. That near-human quality can make people feel uneasy and disrupt the natural flow of conversation.

Modern voice assistants like Alexa and Siri navigate this challenge differently. Some embrace artificial characteristics while others pursue hyper-realistic human imitation.

How human perception responds to voice AI

Human brains evolved to detect authentic voice patterns. We register subtle vocal cues, such as pitch, timing, and breath, within a fraction of a second of hearing someone speak.

Our cognitive systems categorize voices as human or artificial for survival purposes. When AI voices blur these categories, they trigger discomfort and suspicion.

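Brain imaging studies of humanoid robots have found heightened activity in the parietal cortex when a human-like appearance is paired with robotic movement, suggesting the brain struggles to reconcile the mismatch. A similar mismatch between a human-sounding voice and machine-like delivery is thought to drive the vocal version of the effect.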

Voice Psychology & Customer Comfort

Human brains process voice characteristics in a fraction of a second, forming instant judgments about trustworthiness and authenticity.

AI voices that trigger psychological discomfort can damage customer relationships before conversations begin. Understanding these cognitive processes helps businesses design better voice interactions.

How human brains judge voices

Voice perception happens faster than conscious thought. Within 500 milliseconds, customers evaluate trustworthiness, competence, and emotional state from vocal cues.

Pitch variations signal emotional authenticity. Rhythm patterns indicate natural speech flow. Breath sounds and micro-pauses convey human presence and attention.

Neural pathways dedicated to voice processing expect specific human characteristics, including vocal tract resonance, breathing patterns, and emotional modulation.

Triggers for discomfort: cognitive dissonance and emotional disconnect

Cognitive dissonance arises when the way a voice sounds conflicts with what listeners know or suspect about its source. Humans instinctively classify what they encounter, a habit that once aided survival. A voice that seems human but turns out to be artificial creates uncomfortable psychological tension.

Customers report feeling “lied to” by AI voices that sound too human. This emotional disconnect damages trust before conversations even begin. Subtle mismatches trigger the strongest reactions.

Perfect pronunciation without natural hesitations feels unnatural. Consistent emotional tone across all interactions seems robotic.

Missing nuance: why AI voices sound “off”

Human speech contains imperfections that convey authenticity. Natural speakers pause, stammer, and inject emotion in subtle ways. These imperfections aren’t errors; they are integral to genuine communication.

AI voices often lack breath sounds, natural hesitations, and pitch variations. This “perfect” speech creates sterile interactions that feel controlled and untrustworthy.

Missing emotional context compounds the problem. AI voices struggle to match tone with conversation content or customer emotional state.

Real reactions from customers

Customer service interactions reveal common uncanny valley reactions. Users describe feeling “manipulated” or “creeped out” by overly human-like AI voices, especially during sensitive conversations.

Hospitality customers expect warmth and personal attention. AI voices that sound human but lack empathy create negative first impressions.

Studies show customers prefer clearly artificial voices over almost-human ones. Transparency about AI nature prevents disappointment and builds appropriate expectations.

Pain Points: What Makes AI Voices Creepy?

Several technical and psychological factors push AI voices into the uncanny valley, creating customer discomfort and trust issues. Almost-perfect replication, response delays, and emotional blindness are primary triggers. Identifying these pain points helps businesses avoid common voice design mistakes.

The danger of almost-perfect replication

The closer AI gets to human-like speech, the more unsettling it becomes when imperfections appear. Almost-perfect replication triggers stronger negative responses than clearly artificial voices.

Luxury brands face particular challenges. High-end customers expect sophisticated interactions and can feel deceived by AI voices that pass as human.

British banks and fashion brands have faced severe backlash when they launched human-like AI avatars. They deleted social media clips of “digital humans” due to negative public reactions.

Latency and emotional recognition

Response delays disrupt natural conversation flow. When AI receptionists take too long to respond, interactions feel awkward and unnatural.

Quick response times are crucial for smooth conversations.

Many AI systems also struggle with emotional recognition and can miss the frustration, excitement, or confusion that customers signal through vocal cues.

Emotional blindness compounds uncanny valley effects. AI voices saying “I understand you’re upset” without genuine empathy recognition makes customers feel patronized.

Voice modulation errors and personality inconsistency

Robotic inflections reveal AI limitations. Monotone delivery or inappropriate emotional responses break the illusion of human interaction. Personality inconsistencies across touchpoints damage brand coherence.

An AI assistant whose personality differs between phone and chat interactions confuses customers. Technical glitches like audio delays, distortion, or processing errors immediately expose its artificial nature.

Brand Perception & Trust: Why Voice Matters

Voice characteristics directly impact how customers perceive brand quality, trustworthiness, and professionalism within seconds of interaction. Poor AI voice design can damage brand reputation and customer relationships permanently. Premium brands face especially high stakes when deploying voice AI systems.

How voice shapes brand identity instantly

Voice carries layers of meaning through tone, pitch, rhythm, and emotion. Customers form judgments about brand personality within milliseconds of hearing an AI voice.

Trust assessments happen unconsciously during initial voice contact. Warm, consistent voices build confidence while artificial-sounding ones create skepticism. Premium brands risk damage when AI voices don’t match expected service quality. Voice becomes the primary brand touchpoint in phone interactions.

Hospitality and luxury: the high-expectation problem

Luxury hotels and high-end restaurants face unique voice AI challenges. Customers pay premium prices expecting personalized, attentive service.

AI voices that sound scripted or impersonal contradict luxury positioning. Guests notice immediately when interactions lack genuine warmth or flexibility.

Failed AI voice implementations have caused lost bookings, guest complaints, and franchise reputation risks in hospitality sectors.

Functional impact: measurements and metrics

Voice quality directly affects conversion rates, customer satisfaction scores, and retention metrics. Poor AI voice experiences increase call abandonment and negative reviews.

Trust metrics plummet when customers feel deceived by AI interactions. Transparency about artificial nature actually improves satisfaction scores. Brand differentiation suffers when AI voices sound generic or robotic compared to competitors with better voice design.

Crafting Natural-Sounding AI Voices

Creating comfortable AI voices requires balancing technological capability with human psychological needs and brand personality alignment.

Strategic imperfection and transparent design prevent uncanny valley effects while maintaining professional service quality. Successful voice design combines emotional intelligence with clear AI disclosure.

Designing for customer comfort: Welco’s principles

Welco prioritizes customer psychological comfort over technical perfection. Our voice selection methodology focuses on emotional intelligence and conversational dynamics.

Human-in-the-loop feedback guides voice tuning decisions. Real customer interactions inform adjustments to tone, pacing, and personality traits.

Contextual adaptation allows voices to match conversation topics and customer emotional states. This creates more natural, appropriate responses.

Achieving voice presence

Voice presence encompasses the emotional warmth and authentic engagement that go beyond basic speech delivery. Welco’s engineering process combines emotional modeling with real customer feedback to create genuinely engaging AI personalities.

Real rapport building requires consistent personality traits across all interactions. Our AI maintains character coherence while adapting to specific contexts.

Embracing imperfection: making voices relatable

Perfect AI voices feel sterile and untrustworthy. Adding natural imperfections like pauses, breathing sounds, and subtle hesitations increases customer comfort and trust.

Welco incorporates carefully crafted imperfections that mimic authentic human speech patterns. These include natural rhythm variations and contextual emotional responses.

Strategic imperfection prevents AI from entering the uncanny valley. Voices that acknowledge limitations feel more honest and approachable than those claiming perfection.
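As one illustration of adding natural pauses, many text-to-speech engines accept SSML, where the W3C-standard `<break>` element inserts a short silence. The sketch below is hypothetical and is not Welco's implementation: the function name `add_natural_pauses` and the pause lengths (250 ms after commas, 450 ms between sentences) are assumptions chosen for illustration, and `<break>` support varies by engine.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical pause lengths; real values should come from listening tests.
COMMA_PAUSE_MS = 250
SENTENCE_PAUSE_MS = 450


def add_natural_pauses(text: str) -> str:
    """Wrap plain text in SSML, adding a short <break> after commas
    and a slightly longer one between sentences."""
    # Escape &, <, > so customer-facing text cannot break the SSML markup.
    safe = escape(text.strip())
    # Short pause after any comma followed by whitespace.
    safe = re.sub(r",\s+", f', <break time="{COMMA_PAUSE_MS}ms"/> ', safe)
    # Longer pause after ., ! or ? followed by whitespace (sentence boundary).
    safe = re.sub(r"([.!?])\s+", rf'\1 <break time="{SENTENCE_PAUSE_MS}ms"/> ', safe)
    return f"<speak>{safe}</speak>"


if __name__ == "__main__":
    print(add_natural_pauses("Thanks for calling, how can I help? We're open until 9."))
```

This punctuation-based rule is deliberately crude: it will also pause after abbreviations such as "Dr.", so a production system would need smarter sentence detection and should vary pause lengths rather than repeat one fixed value.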

Transparency and stylization vs. hyper-realism

Clear AI disclosure prevents customer deception and builds appropriate expectations. Welco voices are designed to sound professional and helpful without pretending to be human.

Stylized voices work better than hyper-realistic ones for most business applications. Customers appreciate honesty about AI capabilities and limitations.

Naming strategies matter too. Human-sounding names like “Sarah” create higher expectations than technology-focused names that signal AI nature clearly.

Matching brand and cultural expectations

Welco customizes voice characteristics to align with brand values and customer demographics. Premium brands receive sophisticated, warm voices while casual brands get friendly, approachable tones.

Cultural preferences influence voice design choices. Regional accents, communication styles, and cultural norms shape customer comfort levels.

Global businesses need consistent yet locally adapted voice personalities. Welco balances brand coherence with cultural sensitivity across markets.

Avoiding Uncanny Valley: Practical Frameworks

Implementing successful AI voice systems requires systematic planning, testing, and continuous improvement based on customer feedback. Practical checklists and monitoring strategies help businesses maintain customer comfort while achieving operational efficiency. Success stories demonstrate measurable improvements in customer satisfaction and business metrics.

Voice selection checklist for brands

To avoid the uncanny valley phenomenon, select a voice that aligns with customer needs, brand identity, and technical performance, then refine it through feedback.

Customer analysis: Define target audience demographics, preferences, and communication expectations. Map the emotional states customers experience during typical interactions.

Brand alignment: Ensure AI voice characteristics match brand personality and values. Test voice consistency across all customer touchpoints and channels.

Technical testing: Evaluate response times, audio quality, and conversation flow. Monitor failure recovery and error handling capabilities.

Feedback integration: Establish customer feedback collection and analysis processes. Create continuous improvement cycles based on real interaction data.

How to monitor and improve AI voice systems

Improving an AI voice receptionist is an ongoing cycle of tracking metrics, analyzing conversations, and testing adjustments.

Track customer satisfaction metrics specifically related to voice interactions.

Monitor completion rates, escalation requests, and sentiment analysis from calls.
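A minimal sketch of summarizing those call metrics follows, assuming each AI-handled call is logged with a completion flag, an escalation flag, and a sentiment score. The `CallRecord` fields and the [-1, 1] sentiment scale are hypothetical placeholders for whatever your call platform and sentiment model actually produce.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    """One AI-handled call. Field names are hypothetical placeholders."""
    completed: bool   # caller's task finished without human help
    escalated: bool   # caller asked for, or was routed to, a human
    sentiment: float  # output of a sentiment model, assumed in [-1.0, 1.0]


def voice_metrics(calls: list[CallRecord]) -> dict[str, float]:
    """Summarize completion rate, escalation rate, and mean sentiment."""
    if not calls:
        raise ValueError("no calls to summarize")
    n = len(calls)
    return {
        # Summing booleans counts the True values.
        "completion_rate": sum(c.completed for c in calls) / n,
        "escalation_rate": sum(c.escalated for c in calls) / n,
        "mean_sentiment": mean(c.sentiment for c in calls),
    }
```

Tracking these figures per voice version, rather than only in aggregate, makes it possible to tell whether a change to tone or pacing moved them.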

Analyze conversation transcripts for patterns in customer confusion or frustration. Identify specific voice characteristics that correlate with positive or negative outcomes.

Implement A/B testing for voice modifications. Compare customer responses to different personality traits, speaking speeds, and emotional expressions.
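To judge whether a voice modification genuinely changed outcomes rather than reflecting random variation, one common option is a pooled two-proportion z-test on a success rate such as call completion. The sketch below assumes calls are randomly split between a current voice (A) and a modified voice (B); the function name is hypothetical, and this is one standard statistical test, not a method the article prescribes.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Compare success rates (e.g., call completion) of voice variants A and B.

    Returns (z, two_sided_p_value) from the pooled two-proportion z-test,
    a normal approximation that assumes reasonably large samples.
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("both variants need at least one call")
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled success rate under the null hypothesis that A and B perform equally.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # every call succeeded (or failed) in both variants
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, if a hypothetical variant B completed 460 of 1,000 calls versus 400 of 1,000 for A, the test gives z of about 2.7 and a p-value of about 0.007, which suggests the difference is unlikely to be chance. Sample sizes should be fixed before the test starts, and satisfaction and escalation data should be weighed alongside the p-value.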

How Welco Leads the Way

Voice AI success requires balancing technological capability with human psychological comfort. The uncanny valley threatens customer trust when AI voices try too hard to seem human.

Welco’s approach prioritizes customer comfort through strategic imperfection and transparent AI design. Our voices build genuine rapport without deceptive human mimicry.

Brands investing in thoughtful voice design create competitive advantages through improved customer experience. The future belongs to companies that understand voice psychology and customer emotional needs.

Ready to avoid the uncanny valley with your AI voice strategy? Contact Welco today to discover how our psychologically optimized voice solutions can transform your customer interactions while maintaining an authentic brand personality.

Frequently Asked Questions

Why do some AI voices sound creepy or unsettling to customers?

AI voices often trigger discomfort when they fall into the “uncanny valley”—the zone where a voice is almost human but subtle flaws (like unnatural intonation, pacing, or emotional expression) make it feel off or insincere. This mismatch causes cognitive dissonance, making listeners uneasy even if the speech seems technically advanced.

How can brands avoid the uncanny valley in their AI voice designs?

Brands should avoid trying to perfectly mimic human voices. Instead, they can focus on clear, functional, and engaging voices with well-defined tonal qualities, natural imperfections, and transparent disclosure that the speaker is AI. Voice design guidelines recommend incorporating enough nuance—like pauses, varied prosody, and conversational timing—without overreaching for hyper-realism.

Can AI voice assistants recognize emotions or tailor responses accordingly?

Current AI voice assistants can detect basic sentiment and respond to some contextual cues, but true emotional intelligence and nuanced empathy are limited. Developers are actively researching ways to help voice AI better identify and respond to user emotions through speech patterns, but striking the right balance without sounding insincere or breaching ethical boundaries remains a major challenge.

What are the main benefits and limitations of using AI for brand voice?

AI enables fast, scalable, and consistent production of branded audio and written content across platforms. It personalizes interactions and speeds up content delivery, but it risks sounding generic or inconsistent without a clearly defined brand voice strategy and human oversight. Brand teams must guide AI tools with clear style guides and regular reviews to maintain authenticity and avoid misalignment.