The Call That Changed My Perspective

Three years ago, I was deeply skeptical about AI handling anything security-related.

The early systems were clunky, vendors overpromised, and most implementations felt like expensive experiments that barely worked better than a well-trained receptionist.

That changed with a 2 AM call from Dr. James Patterson at Westside Family Practice. His night receptionist had accidentally disclosed detailed patient information to someone claiming to be family—a potential $50,000 HIPAA violation that could destroy his practice.

“I can’t afford another mistake like this,” he said. “What are my options?”

Eight months after implementing an AI-powered security system, his practice hasn’t had a single compliance incident. More importantly, their last regulatory audit took two hours instead of two days because every interaction was perfectly documented.

But here’s what I learned: it wasn’t the AI threat detection that made the difference. It was the forced standardization of security protocols.

Understanding how AI receptionist integration capabilities transform business operations reveals why proper security implementation requires a comprehensive approach to connecting all your systems.

What Actually Drives Security Success

After analyzing dozens of implementations, I’ve discovered that most failures aren’t technology failures—they’re planning and expectation failures in AI governance.

Consistency Beats Intelligence

The biggest win isn’t advanced machine learning. It’s having security protocols followed exactly the same way, every single time.

I’ve watched reception staff juggle phone calls, visitors, and deliveries simultaneously. Even excellent employees slip up occasionally. An AI system doesn’t get distracted or tired, and it doesn’t make exceptions because “they seemed legitimate.”

Documentation That Actually Protects You

Every call gets logged automatically with timestamps, verification steps, and outcomes through AI security platforms. When compliance audits happen, you have organized records instead of hunting through handwritten notes.
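As a rough illustration of what one of those log records might contain, here is a minimal Python sketch. The field names and the one-JSON-object-per-line format are assumptions made for this example, not the schema of any particular product.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class CallLogEntry:
    """One audit-trail record for a single call (hypothetical schema)."""
    caller_claimed_identity: str
    verification_steps: list[str]
    outcome: str  # e.g. "handled", "escalated", "refused"
    # Timestamp is recorded in UTC at creation time unless supplied explicitly.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(entry: CallLogEntry) -> str:
    """Serialize an entry as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)


entry = CallLogEntry(
    caller_claimed_identity="family member",
    verification_steps=["date of birth matched", "no authorization on file"],
    outcome="escalated",
)
print(to_audit_line(entry))
```

Writing one self-describing record per call is what makes an audit quick: an auditor can filter by outcome or date instead of reading handwritten notes.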

Real Impact: One legal client told me their malpractice insurance premium dropped $3,200 annually after demonstrating consistent client confidentiality protocols through AI documentation and AI compliance measures.

Smart Escalation (When It Works)

Modern intelligent security systems recognize when situations need human judgment. Dr. Patterson’s practice escalates about 15% of calls to staff—exactly the complex scenarios that should get human attention.

The catch: This only works when the escalation rules are properly configured, which took us three rounds of testing to get right.
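To make "escalation rules" concrete, here is a minimal Python sketch of a rule check and the kind of scenario list you might re-run after every configuration change. The request categories, field names, and rules are hypothetical; real deployments will have their own, and usually far more of them.

```python
from dataclasses import dataclass

# Hypothetical request categories that always go to a human.
ALWAYS_ESCALATE = {"family_emergency", "opposing_counsel", "joint_client"}


@dataclass
class CallContext:
    request_type: str
    identity_verified: bool
    authorization_on_file: bool


def should_escalate(call: CallContext) -> bool:
    """Return True when the call should be handed to staff."""
    if call.request_type in ALWAYS_ESCALATE:
        return True
    # Fail closed: anything not fully verified and authorized goes to a human.
    return not (call.identity_verified and call.authorization_on_file)


# Scenarios to re-check after each round of configuration (expected result last).
SCENARIOS = [
    (CallContext("appointment", True, True), False),
    (CallContext("records_request", True, False), True),
    (CallContext("family_emergency", True, True), True),
]

for call, expected in SCENARIOS:
    status = "ok" if should_escalate(call) == expected else "MISMATCH"
    print(f"{call.request_type}: {status}")
```

The design choice worth copying is the fail-closed default: when the rules don't clearly cover a call, it goes to staff rather than being handled automatically.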

For more insights on how these systems handle complex interactions, explore natural language processing in AI systems.

The foundation of effective AI phone system security lies in understanding voice recognition technology breakthroughs that have made these conversations feel completely natural while maintaining enterprise phone security protocols.

Real Implementation Results (Including the Struggles)

Legal Firm: Information Protection Success… Eventually

Before: 2-3 accidental information disclosures monthly

After 6 months: No major incidents, 90% better documentation

Hidden reality: Took 8 weeks of configuration because their client authorization hierarchies were more complex than anyone anticipated.

What went wrong initially: The AI security solutions kept escalating routine requests because we hadn’t properly mapped all the attorney-client relationship scenarios. Staff got frustrated and started overriding the system.

What finally worked: Custom programming for joint clients and detailed escalation procedures for opposing counsel requests through legal AI compliance.

Healthcare Practice: HIPAA Compliance Challenges

Before: Inconsistent verification, incomplete call logs

After 4 months: Consistent verification protocols, complete audit trails

Ongoing issue: Family emergency scenarios still require human judgment 60% of the time.

Reality check: These weren’t instant transformations. Both implementations required 6-8 weeks of configuration and staff training, plus ongoing refinements as edge cases emerged.

Where AI Still Fails (And Always Will)

Complex Authorization Scenarios

Joint clients, family medical emergencies, opposing counsel requests: these situations require understanding context and relationships that current AI models simply cannot grasp.

Example: A caller claiming to be picking up medical records for their “elderly mother” who “can’t drive anymore.” AI anomaly detection can verify basic information, but it can’t assess whether this is legitimate family assistance or potential elder abuse.

Emergency Situations

When someone claims there’s a building emergency or medical crisis, you need human judgment to assess credibility. AI documentation helps, but decisions require human experience.

Real scenario: Someone called claiming the building was evacuating due to a gas leak and needed immediate access to employee emergency contacts. The AI security platforms properly escalated, but only a human could verify with building management that no evacuation was happening.

Evolving Social Engineering Threats

Attackers get more sophisticated over time. While predictive security analytics improves, experienced staff remain your best defense against novel manipulation attempts.

When evaluating different approaches to call security, comparing AI vs traditional IVR systems reveals why modern AI cybersecurity solutions provide superior protection against social engineering attacks.

Implementation Lessons (The Hard Way)

Weeks 1-2: Uncomfortable Discoveries

You’ll discover security gaps you didn’t know existed. Most businesses have informal procedures that work fine until you try to program them into AI security posture management systems.

Example: One law firm realized they had no standardized process for verifying that family members could access a deceased client’s case information. It worked fine when everyone “knew the situation,” but proved impossible to automate.

Weeks 3-6: Configuration Reality

Every business is different. Generic configurations rarely work well. Expect several rounds of testing with real-world scenarios before everything clicks in your enterprise AI protection system.

Timeline Reality: Most successful implementations take 2-3 months to feel seamless, not the “operational in two weeks” that vendors promise.

Month 2+: Edge Cases Emerge

The system you launch isn’t your final system. Edge cases will emerge, staff will suggest improvements, and you’ll find better ways to handle situations through AI risk management.

Ongoing challenge: New regulations, staff changes, and business growth require continuous system updates for responsible AI implementation.

For deeper technical insights, machine learning call optimization explains how these systems prioritize security protocols.

The sophisticated technical foundation supporting these security measures relies on conversational AI architecture designed specifically for secure business communications.

Industry-Specific Implementation Realities

Healthcare: HIPAA Complexity

Patient verification protocols need careful design. Family authorization scenarios are particularly complex and require multiple configuration rounds.

Challenge: Mental health privacy laws add another layer of complexity that many generic AI compliance systems can’t handle properly.

Legal Services: Privilege Protection Nuances

Attorney-client privilege situations vary widely. Systems need a sophisticated understanding of legal relationships and conflict protocols.

Reality: Joint representation and opposing counsel scenarios require human oversight 40% of the time, even after extensive configuration.

Financial Services: Fraud Prevention Integration

Account verification requirements change based on request type. Integration with existing fraud prevention systems is crucial but technically challenging.

Hidden cost: Most implementations require custom integration work that vendors don’t mention upfront.

Many businesses also invest in AI receptionist voice customization to ensure their security voice systems sound professional and trustworthy, which can add 15-25% to implementation costs but significantly improves customer acceptance.

The Investment Reality (Including Hidden Costs)

| Implementation Type | Base Cost | Hidden Costs | Total Reality |
| --- | --- | --- | --- |
| Small practice setup | $5,000-12,000 | $2,000-4,000 customization | $7,000-16,000 |
| Industry compliance | $12,000-25,000 | Legal review costs | $15,000-32,000 |
| Enterprise integration | $25,000-60,000+ | Internal IT resources | $35,000-80,000+ |
| Annual optimization | 20-30% of base | Mandatory upgrades | 25-35% total |

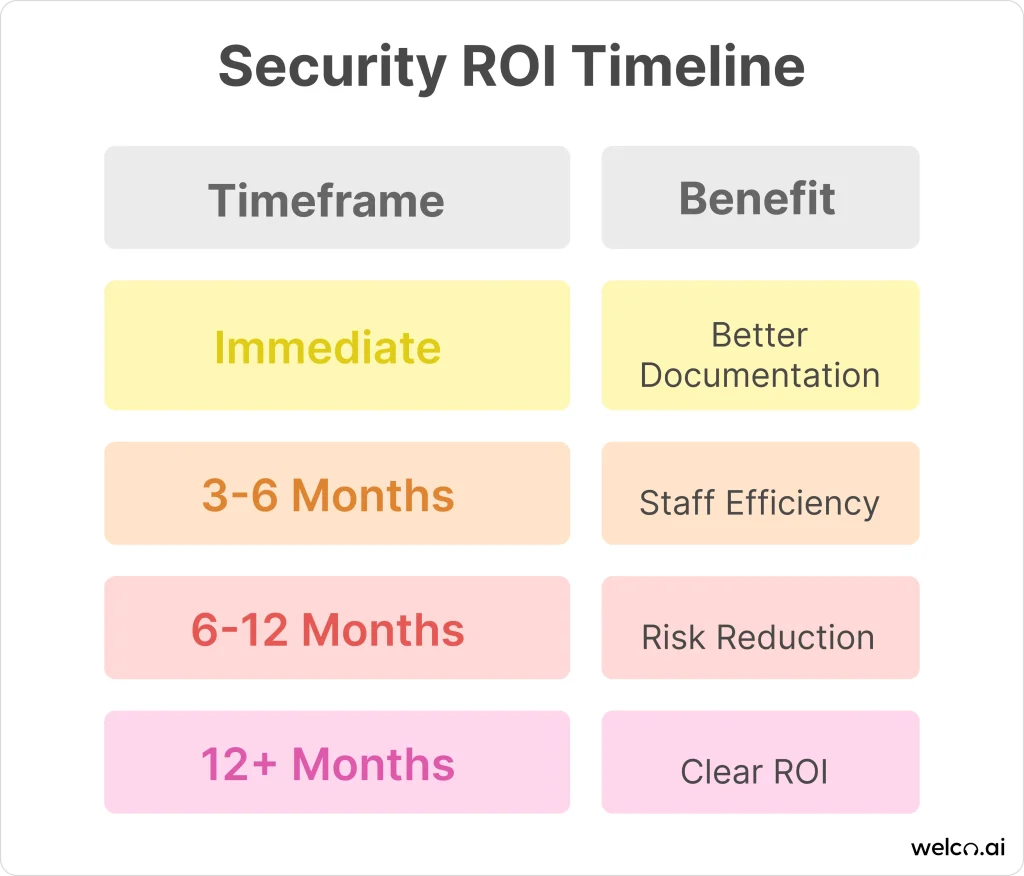

ROI Timeline (Realistic Expectations):

- Immediate: Better documentation and consistency through real-time threat monitoring

- 3-6 months: Staff efficiency on routine tasks

- 6-12 months: Measurable risk reduction through AI governance

- 12+ months: Clear ROI through avoided incidents

Truth: Most businesses see clear ROI within 8-12 months, primarily through risk reduction rather than dramatic cost savings from enterprise AI protection.

Vendor Evaluation: What Actually Matters

Red Flags That Predict Failure:

- Claims about preventing “all security threats”

- Reluctance to discuss system limitations in detail

- No industry-specific implementation examples with real references

- Vague explanations of how escalation actually works

- Promises of “operational in two weeks”

Good Signs of Realistic Vendors:

- Honest discussion of edge cases and ongoing limitations through AI risk management

- Industry references willing to discuss both successes and ongoing challenges

- Clear documentation of what the AI security solutions cannot do

- Realistic timelines that include configuration and training phases

- Detailed escalation procedures and failure scenarios for generative AI security

For comparison guidance, AI vs traditional IVR systems shows key differences in AI-powered security capabilities.

The Uncomfortable Truth

AI phone system security isn’t magic—it’s really good at consistently executing protocols and maintaining detailed records through intelligent security systems.

The businesses succeeding treat it as a significant operational improvement that requires ongoing investment, not a miracle solution. They spend time on proper configuration, train staff thoroughly, and maintain realistic expectations about AI model security.

The businesses failing treat it as a technology purchase rather than an operational change project in AI governance.

If you’re dealing with compliance requirements, security consistency challenges, or documentation gaps, AI cybersecurity reception systems have become a practical solution worth serious consideration.

But be honest about your current security gaps first. If you can’t clearly articulate what’s broken with your current system, adding AI security platforms won’t fix problems you haven’t identified.

The technology is ready. Are you prepared to invest in the operational changes, staff training, and ongoing refinement it requires for responsible AI? That’s the real question determining success or failure.

Start by auditing your current secure business communications protocols. Document what works, what fails, and where human error creates risk. Only then will you know if AI threat detection can actually solve your specific problems—or if you’re just buying expensive technology to fix problems you haven’t properly identified.

Don’t let another security incident catch you unprepared. The choice isn’t whether AI-powered security will improve your operations—it’s whether you’ll implement it properly with realistic expectations and comprehensive AI compliance planning.

Frequently Asked Questions

What if the AI becomes a security vulnerability itself?

Valid concern. AI systems should be isolated from core business systems with limited access to only essential verification data. If compromised, damage should be limited to call logs and basic information, not your entire infrastructure.
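One way to picture "limited access to only essential verification data" is a field allowlist applied before any record reaches the phone system. The Python sketch below is an illustration only; the field names and the sample record are hypothetical.

```python
# Hypothetical allowlist: the only fields the phone system may ever see.
ALLOWED_FIELDS = frozenset({"patient_id", "date_of_birth", "authorized_contacts"})


def verification_view(record: dict) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


full_record = {
    "patient_id": "P-001",
    "date_of_birth": "1950-04-12",
    "authorized_contacts": ["spouse"],
    "diagnosis": "(clinical detail)",
    "billing_notes": "(financial detail)",
}

view = verification_view(full_record)
print(sorted(view))  # clinical and billing fields never leave the core system
```

Because the filter is an allowlist rather than a blocklist, any new field added to the core system stays hidden from the phone system until someone deliberately exposes it.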

What happens during system downtime?

This is why backup procedures are mandatory. Most systems have 99%+ uptime, but you need staff trained on manual protocols. I include printed checklists, emergency contacts, and regular drills in every implementation.
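It helps to translate an uptime percentage into hours, because 99% sounds better than it is: over a 365-day year it still allows roughly 87.6 hours of downtime. The short Python sketch below does that conversion; the 99.9% figure is included only for comparison and is not a claim about any vendor.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year


def annual_downtime_hours(uptime_percent: float) -> float:
    """Hours per year a system may be unavailable at a given uptime percentage."""
    return HOURS_PER_YEAR * (1 - uptime_percent / 100)


for uptime in (99.0, 99.9):
    hours = annual_downtime_hours(uptime)
    print(f"{uptime}% uptime allows about {hours:.1f} hours of downtime per year")
```

Those hours will not necessarily fall outside business hours, which is why the manual protocols and drills matter.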

What happens if the AI screws up and leaks confidential information?

This is the fear that keeps most business owners awake. In my experience, properly configured AI systems are actually more conservative than human staff—they escalate uncertain situations rather than guess. But configuration is key. Poor setup absolutely can lead to inappropriate information sharing.

How do we know if someone is trying to manipulate the AI?

AI systems are good at detecting inconsistencies in caller information, but sophisticated social engineering still requires human judgment. Think of AI as a very thorough first-line security guard who knows when to call for backup—but you still need experienced backup available.

How long before our staff trusts the system?

About 6-8 weeks in my experience, but only with proper training on limitations, not just capabilities. Staff who understand that AI is designed to escalate complex situations adapt much faster than those who expect it to handle everything.

Is this worth the cost given our current security works fine?

If your current security is already perfect and consistently documented, you probably don’t need this technology. The value comes from consistency and risk reduction. A single compliance violation can cost $50,000+ in healthcare or result in malpractice claims in legal services.

Our staff is resistant to technology—how do we handle that?

Start with the value proposition: AI handles tedious verification tasks, freeing staff for complex customer service. Focus training on when to trust versus override the system. Most resistance fades when staff realize it makes their jobs easier rather than replacing their judgment.