Your AI Assistant Just Cost You a Customer

They hung up the moment they realized they were talking to a robot—and now they’re probably calling your competitor. Sound familiar?

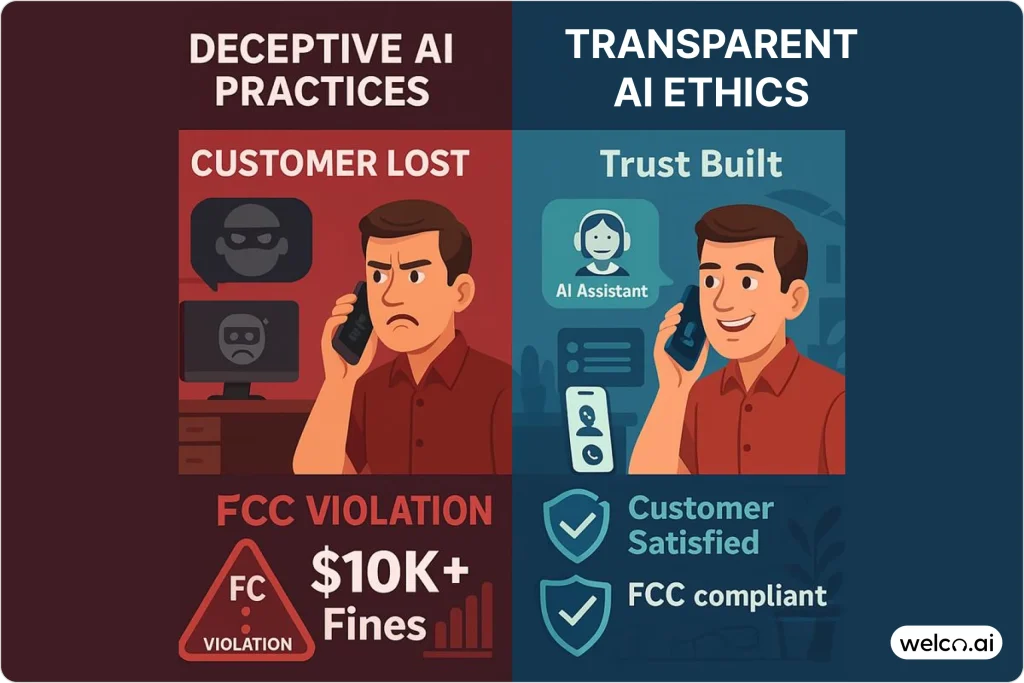

As businesses rush to integrate AI into customer interactions, many are discovering that deceptive AI practices backfire spectacularly. The customer who feels tricked by an undisclosed AI system becomes an angry former customer. But here’s the counterintuitive truth: transparent AI communication actually builds more trust than trying to fool people.

AI ethics in customer communication covers three critical areas: transparency, privacy protection, and fair treatment. Get these right, and you’ll not only avoid regulatory trouble—you’ll gain a competitive advantage. The Federal Communications Commission has recognized that AI-powered customer communication creates unique ethical challenges, with new rules requiring businesses to rethink how they handle AI interactions.

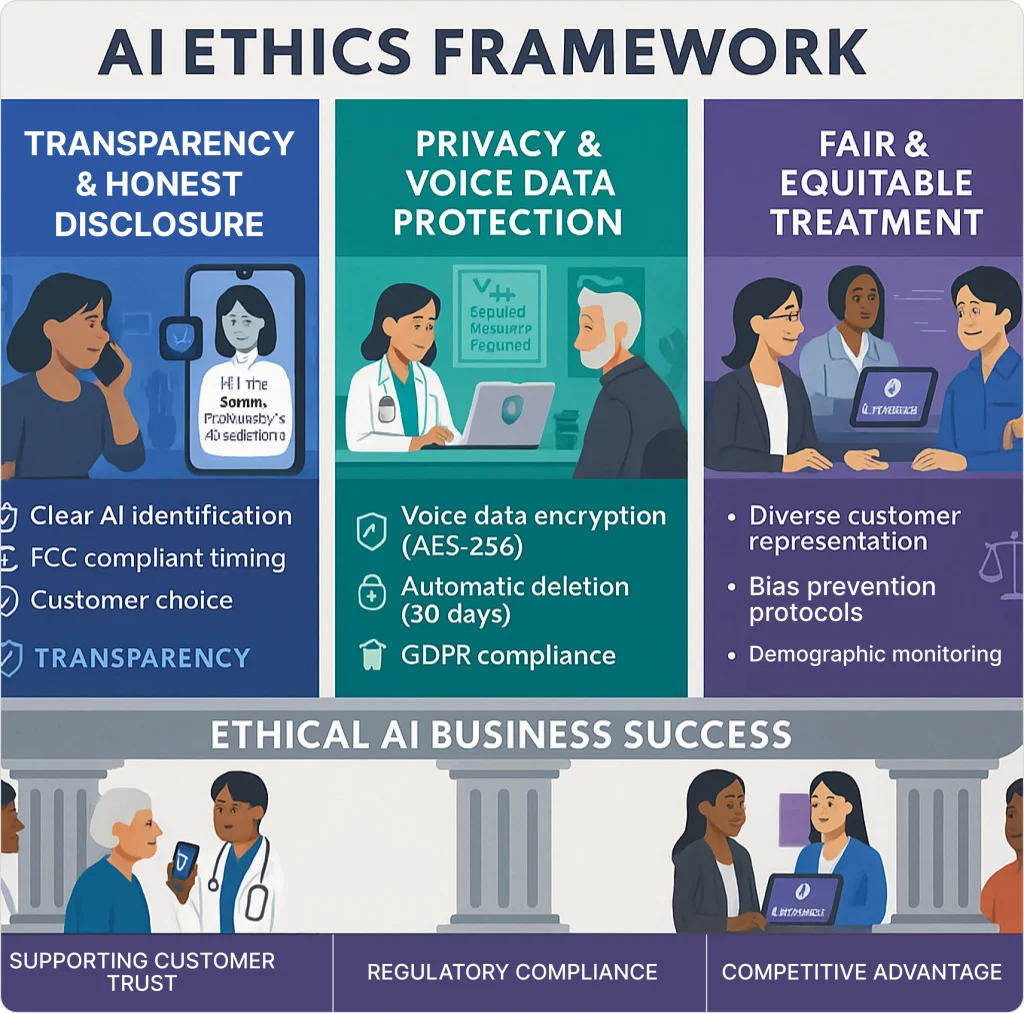

The Three Pillars of Ethical AI Customer Communication

Pillar 1: Transparency and Honest Disclosure

The Bottom Line: Tell customers they’re talking to AI—immediately and clearly.

Current FCC rules for AI require clear identification of AI systems, but ethical businesses go beyond minimum compliance. Here’s what works:

✓ Good Disclosure Script: “Hi! This is Sarah, [Company]’s AI assistant. I can help with most questions or connect you to a human rep right away. What can I help with?”

✗ Bad Disclosure: “Hello, this is Sarah from [Company]. How can I assist you today?” (No AI mention)

Studies show transparent AI interactions actually increase customer satisfaction compared to deceptive interactions. As voice AI technology advances, this transparency becomes even more critical.

Why transparency works:

- Builds trust through honest interaction

- Lets customers choose their preferred communication style

- Reduces frustration when AI hits limitations

- Meets current FCC disclosure guidelines

Pillar 2: Respecting Customer Privacy and Voice Data

The Reality Check: Voice data reveals more than you think—age, health status, emotional state, even socioeconomic background.

Imagine discovering your competitor has been analyzing your customers’ voice patterns to poach them with targeted ads. That’s the risk of poor voice data protection. Voice data is now treated like fingerprints—it needs serious protection. Think of it as biometric information, not just sound waves.

Essential Practices:

- Get explicit consent before analyzing voice patterns beyond basic service

- Encrypt voice data both at rest (AES-256 for storage) and in transit (TLS for transmission)

- Delete voice data automatically when it’s no longer needed

- Offer clear opt-out mechanisms for voice analysis

- Follow GDPR guidelines for EU customers (even if you’re US-based)

Real Example: Sarah’s Pet Clinic handles it right. When customers call for appointment scheduling, their AI assistant processes the request, books the appointment, then automatically deletes the voice recording within 30 days. No analysis of voice patterns for marketing, no indefinite storage—just ethical service that respects customer privacy.
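To make the automatic-deletion practice concrete, here is a minimal sketch of a retention check. It assumes each recording carries a creation timestamp and that a 30-day window applies, as in the example above. The `VoiceRecording` class and `expired_recordings` function are hypothetical names for illustration, not part of any particular phone or AI platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 30  # assumed policy window, matching the 30-day example


@dataclass
class VoiceRecording:
    recording_id: str
    created_at: datetime  # timezone-aware UTC timestamp


def expired_recordings(recordings, now=None, retention_days=RETENTION_DAYS):
    """Return the recordings that are older than the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [r for r in recordings if r.created_at < cutoff]


# Example: one recording inside the window, one past it.
now = datetime(2025, 3, 1, tzinfo=timezone.utc)
recordings = [
    VoiceRecording("call-001", datetime(2025, 2, 20, tzinfo=timezone.utc)),
    VoiceRecording("call-002", datetime(2025, 1, 15, tzinfo=timezone.utc)),
]
to_delete = expired_recordings(recordings, now=now)
print([r.recording_id for r in to_delete])  # ['call-002']
```

In practice a job like this would run on a schedule and hand the returned list to whatever deletion routine your storage provider offers.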

Pillar 3: Ensuring Fair and Equitable Treatment

The Challenge: AI can struggle with accents, make assumptions based on voice characteristics, or provide different service quality to different demographic groups.

Picture this: Your AI works perfectly for customers with standard American accents but struggles with anyone who sounds “different.” Meanwhile, your competitor’s system treats everyone fairly. Guess where those frustrated customers are headed?

Common Problems:

- AI assumes older voices need simplified language

- Accent recognition fails for non-native speakers

- System escalates certain demographic groups faster than others

- Cultural communication styles aren’t properly understood

Solutions That Work:

- Test your AI monthly with diverse employee volunteers

- Monitor escalation rates by demographic (red flag if one group escalates 40% more)

- Train on diverse datasets—not just standard American English

- Set up human escalation when AI confidence drops below 80%
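The escalation-rate red flag can be checked with a few lines of code. This is a rough sketch under one assumption: "escalates 40% more" is read as a rate more than 40% above the group with the lowest escalation rate. The group labels and the `flag_disparities` function are hypothetical, and you may prefer a different baseline, such as the overall average.

```python
def escalation_rates(counts):
    """counts maps group -> (escalated_calls, total_calls); returns group -> rate."""
    return {g: esc / total for g, (esc, total) in counts.items() if total > 0}


def flag_disparities(counts, threshold=0.40):
    """Return groups whose rate exceeds the lowest group's rate by more than threshold."""
    rates = escalation_rates(counts)
    if not rates:
        return []
    baseline = min(rates.values())
    limit = baseline * (1 + threshold)
    return sorted(g for g, rate in rates.items() if rate > limit)


# Example month: (escalated calls, total calls) per group.
monthly_counts = {
    "group_a": (10, 100),  # 10% escalation rate (baseline)
    "group_b": (12, 100),  # 12%, which is 20% above baseline
    "group_c": (15, 100),  # 15%, which is 50% above baseline
}
print(flag_disparities(monthly_counts))  # ['group_c']
```

Small call volumes make these rates noisy, so treat a flag as a prompt to investigate rather than proof of bias.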

As you explore human-AI collaboration models, focus on enhancing human empathy rather than replacing it.

Industry-Specific Considerations

Healthcare: Extra Protection Required

Healthcare AI faces HIPAA compliance requirements beyond general business rules. Patient data demands maximum protection.

Healthcare Must-Dos:

- Business Associate Agreements with any AI vendor handling patient data

- Patient consent specifically for AI voice interactions

- Emergency escalation that prioritizes patient safety over efficiency

- Zero tolerance for AI making medical recommendations

Cautionary Tale: Dr. Martinez’s four-location pediatric practice in Austin learned this the hard way. After implementing AI without proper HIPAA safeguards, they faced a compliance audit that cost $15,000 in legal fees alone. Now they’re a model of ethical AI implementation in healthcare.

Financial Services & General Business

Financial institutions must consider Fair Credit Reporting Act requirements and Equal Credit Opportunity Act provisions.

All businesses face FTC regulations on deceptive practices, state consumer protection laws, and ADA accessibility requirements.

Whatever the industry, modernizing business reception means balancing efficiency with the personal touch customers expect.

Building Your AI Ethics Framework

Start With Your AI Ethics Policy

Don’t overthink this—start simple and build up.

Essential Components:

- Disclosure requirements: Script templates and timing requirements

- Privacy standards: What voice data you collect, how long you keep it

- Bias prevention: Testing schedules and escalation triggers

- Customer rights: Easy human escalation and complaint procedures

- Staff training: Monthly sessions covering ethics principles

- Incident response: What to do when something goes wrong

Monitoring That Actually Works

Skip the complex dashboards—focus on metrics that matter:

- Customer satisfaction scores for AI vs. human interactions

- Escalation rates by demographic (monthly review)

- Complaint themes (quarterly analysis)

- AI confidence scores (flag interactions below 80%)
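For the confidence-score metric, a simple filter is often enough to start. The sketch below assumes your AI platform reports a confidence value between 0 and 1 for each interaction. The call IDs and the `needs_human_review` function are made up for illustration.

```python
CONFIDENCE_THRESHOLD = 0.80  # the 80% cutoff from the list above


def needs_human_review(confidence, threshold=CONFIDENCE_THRESHOLD):
    """True when the AI's confidence falls below the review threshold."""
    return confidence < threshold


# Hypothetical (call_id, confidence) pairs exported from the AI system.
interactions = [("call-101", 0.93), ("call-102", 0.74), ("call-103", 0.80)]

flagged = [call_id for call_id, conf in interactions if needs_human_review(conf)]
print(flagged)  # ['call-102']
```

Note that a score of exactly 0.80 is not flagged here, because the rule is "below 80%".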

Modern voice search and business discovery capabilities can enhance customer experience while maintaining ethical standards.

Training Your Team

Essential Monthly Training Topics:

- How to handle “I want to talk to a human” requests

- Recognizing when AI is struggling with specific customers

- Privacy protection basics for customer data

- Understanding predictive service capabilities and appropriate use

The Business Case: Why Ethics Pay Off

Ethical AI isn’t just about avoiding penalties—it’s smart business.

Measurable Benefits:

- Higher customer satisfaction with transparent AI interactions

- Increased customer retention due to trust-building

- Reduced complaint volume and negative reviews

- Competitive differentiation in crowded markets

Real Impact Example: TechStart Solutions, a software company in Denver, switched from hidden AI to transparent AI customer service. Result: 31% fewer complaints and 18% higher customer satisfaction scores within six months. Their secret? Customers appreciated knowing exactly what they were getting.

Forward-thinking businesses apply the same ethical standards across every channel, whether phone, chat, or email, and keep human oversight at the center of any future virtual assistance model they adopt.

Getting Started: Your 30-Day Action Plan

Week 1: Assessment

- Call your own business line—does AI clearly identify itself?

- Review current voice data storage and deletion policies

- Test the AI with employees who have different accents and speaking styles

Week 2: Quick Fixes

- Update AI disclosure scripts (use the Good Disclosure Script from Pillar 1 as a template)

- Implement automatic voice data deletion (30-90 days max)

- Create simple human escalation process

Week 3: Documentation

- Draft basic AI ethics policy

- Set up bias monitoring spreadsheet

- Schedule monthly team training

Week 4: Testing & Refinement

- Test updated system with diverse group

- Collect initial feedback

- Make necessary adjustments

Quick Self-Assessment

Ask Yourself:

- Do customers always know they’re talking to AI?

- Can customers easily reach a human when needed?

- Are we protecting voice data like sensitive biometric information?

- Does our AI treat all customers fairly regardless of how they sound?

- Do we have a plan when AI encounters limitations?

Your Risk Level:

- 0-2 “yes” answers: High risk. Start implementing changes immediately.

- 3-4 “yes” answers: Good foundation. Focus on filling the gaps.

- All 5 “yes” answers: Excellent. Maintain current practices and monitor regularly.

If you answered “no” or “unsure” to any question, start there.
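If you want to track this self-assessment over time, the scoring is easy to automate. Here is a small sketch that maps the number of “yes” answers to the risk levels above. The `risk_level` function and the answer keys are hypothetical names chosen for this example.

```python
def risk_level(yes_answers):
    """Map the count of 'yes' answers (0-5) to the risk levels listed above."""
    if not 0 <= yes_answers <= 5:
        raise ValueError("expected between 0 and 5 'yes' answers")
    if yes_answers <= 2:
        return "High risk"
    if yes_answers <= 4:
        return "Good foundation"
    return "Excellent"


# Example: answers to the five questions, in order.
answers = {
    "customers_know_its_ai": True,
    "easy_human_access": True,
    "voice_data_protected": False,
    "fair_treatment": True,
    "plan_for_ai_limits": False,
}
print(risk_level(sum(answers.values())))  # Good foundation
```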

Frequently Asked Questions

How do I test if my AI system meets FCC guidelines?

Call your business line and time how long it takes for AI to identify itself. If it’s not within the first 10 seconds with clear language, you’re likely non-compliant. Document this test for compliance records.
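If you record or transcribe test calls, the same check can be run automatically. The sketch below assumes a transcript is a list of (seconds from call start, speaker, text) entries and that a disclosure is any assistant line mentioning AI or a similar phrase. The keyword pattern and function names are illustrative assumptions, not a legal standard.

```python
import re

# Assumed disclosure phrases; adjust to match your own scripts.
DISCLOSURE_PATTERN = re.compile(
    r"\bAI\b|artificial intelligence|automated assistant|virtual assistant",
    re.IGNORECASE,
)


def seconds_to_disclosure(transcript):
    """Return the start time of the first assistant line that discloses AI, or None."""
    for offset, speaker, text in transcript:
        if speaker == "assistant" and DISCLOSURE_PATTERN.search(text):
            return offset
    return None


def discloses_in_time(transcript, limit_seconds=10.0):
    """True if an AI disclosure starts within the first limit_seconds of the call."""
    offset = seconds_to_disclosure(transcript)
    return offset is not None and offset <= limit_seconds


good_call = [
    (1.5, "assistant", "Hi! This is Sarah, [Company]'s AI assistant."),
    (6.0, "caller", "I need to reschedule an appointment."),
]
bad_call = [
    (1.0, "assistant", "Hello, this is Sarah from [Company]. How can I assist you today?"),
    (5.0, "caller", "Am I talking to a real person?"),
]
print(discloses_in_time(good_call))  # True
print(discloses_in_time(bad_call))   # False
```

Saving the output of each run alongside the date gives you the documented test record mentioned above.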

What happens if a customer complains to regulators?

Customer complaints can trigger FCC investigations. The process includes reviewing your disclosure practices, voice data handling, and compliance documentation. Proper policies reduce penalties significantly—most first violations get warning letters, but repeated violations can result in substantial fines.

Do I need separate consent for AI voice interactions?

Yes, especially under GDPR and state privacy laws. Voice data is biometric information requiring explicit consent. Best practice: obtain specific consent at interaction start, explaining what voice data you collect and how long you keep it.

How can I detect AI bias in my system?

Test monthly with diverse employees or volunteers. Monitor escalation rates by demographic: if one group escalates 40% more often than others (the red-flag threshold from Pillar 3), investigate immediately. Look for patterns in customer satisfaction scores and complaint types across different groups.

What are realistic compliance costs?

Internal handling requires 10-15 hours monthly for monitoring and updates, plus initial setup costs for legal review and implementation. Many businesses find automated compliance platforms more cost-effective long-term. Compare costs against potential penalties and customer trust issues.