Why Trust in AI Matters More Than Ever

Picture this: Your AI chatbot just gave a customer completely wrong information, your recommendation engine is showing bizarre suggestions, and your biggest client is questioning your “smart” technology.

Sound familiar? This is where knowing how to build customer trust in AI can turn these digital disasters into competitive advantages that actually increase customer loyalty.

What does building customer trust in AI mean? It means creating transparent systems that clearly explain their decisions, protect data privacy, and maintain accountability when mistakes occur. The process doesn’t have to feel like an uphill battle.

Whether you are deploying chatbots or recommendation systems, the right approach turns AI skepticism into customer confidence.

Transparent AI communication reduces complaint escalation by 65%. But here’s the challenge: in 2024, only 33% of consumers said they trust companies with data collected by AI. The trust gap keeps widening despite AI’s proven benefits.

If you want to build long-lasting customer relationships, you need a well-designed, transparent AI-based communication system.

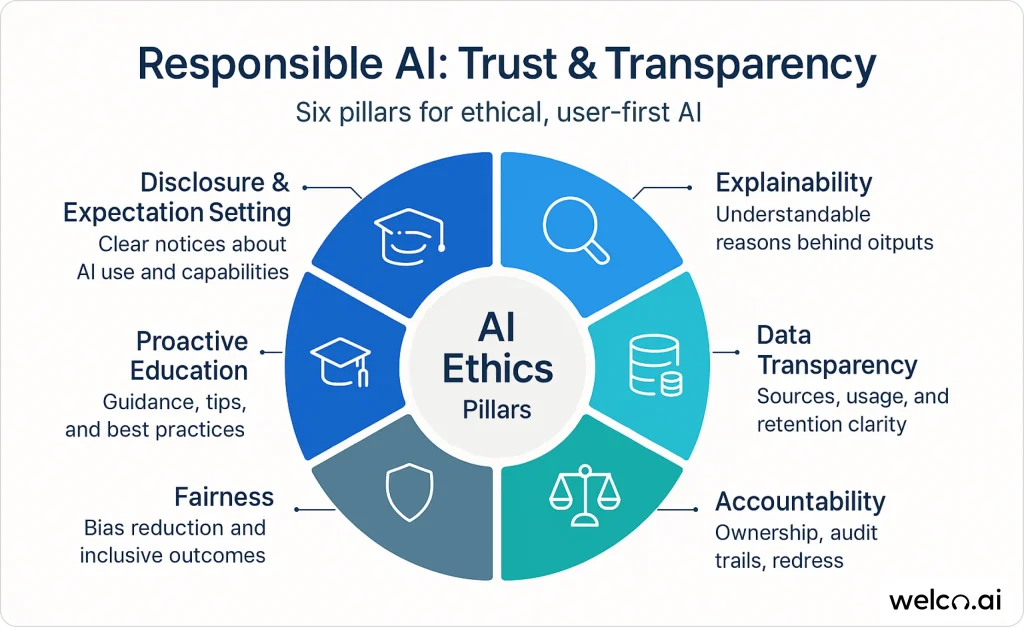

What Are the Core Principles of Transparent AI Communication?

The six core principles of transparent AI communication are: disclosure and expectation setting, explainability, data transparency, accountability, fairness, and proactive education.

Disclosure and setting expectations

How do you disclose AI to customers? Start conversations with clear statements like “Hi, I’m your AI-powered assistant” and proactively communicate limitations when requests exceed capabilities.

Clear upfront communication prevents misunderstandings while building immediate credibility with customers. When customers interact with AI systems, they need explicit information about what they’re engaging with. Leading companies now start chatbot conversations with phrases like “Hi, I’m your AI-powered virtual assistant here to help!” This immediately sets proper expectations.

Limitation disclosure proves equally important. Smart AI systems proactively communicate their boundaries. For example, when a request exceeds capability, effective systems respond: “I can’t handle that specific request, but I can connect you with a human agent who specializes in this area.”

Seamless escalation pathways complete the disclosure framework. Customers should always know how to reach human support when needed.
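The disclosure-and-escalation pattern above can be sketched in a few lines of Python. This is a minimal illustration, not a production chatbot: it assumes some upstream classifier has already mapped the customer’s message to an intent string, and the names `SUPPORTED_INTENTS`, `respond`, and `start_conversation` are hypothetical.

```python
# Hypothetical set of intents this assistant is allowed to answer on its own.
SUPPORTED_INTENTS = {"order_status", "return_policy", "store_hours"}

GREETING = "Hi, I'm your AI-powered virtual assistant here to help!"
HANDOFF = (
    "I can't handle that specific request, but I can connect you "
    "with a human agent who specializes in this area."
)


def start_conversation() -> str:
    """Disclose up front that the customer is talking to an AI system."""
    return GREETING


def respond(intent: str, answers: dict[str, str]) -> tuple[str, bool]:
    """Return (message, escalate_to_human) for an already-classified intent.

    Anything outside SUPPORTED_INTENTS, or without a known answer, is not
    guessed at: the assistant states its limitation and flags a handoff.
    """
    if intent in SUPPORTED_INTENTS and intent in answers:
        return answers[intent], False
    return HANDOFF, True


if __name__ == "__main__":
    answers = {"store_hours": "We're open 9am to 6pm, Monday through Saturday."}
    print(start_conversation())
    print(respond("store_hours", answers))
    print(respond("dispute_charge", answers))
```

The design choice worth noting is the explicit boolean returned with each message: the calling system, not the wording of the reply, decides when to route the conversation to a human.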

Examples in customer service and chatbots

Financial services lead in disclosure best practices. Banks now clearly label AI-powered fraud detection alerts, explaining: “Our AI system flagged this transaction because it’s higher than your average spending and occurred in a different location.”

Healthcare AI applications take disclosure further. Modern diagnosis support tools explicitly state: “This AI analysis supports your doctor’s decision-making but doesn’t replace medical expertise.” This approach builds confidence while managing expectations.

Customer service chatbots increasingly use visual indicators, like robot icons or “AI” labels, to maintain transparency throughout conversations.

Explainability & interpretability

What is AI explainability? AI explainability means translating complex algorithmic decisions into understandable language, like “We recommend this product based on your purchase history and saved favorites.”

Making AI decisions understandable turns complex systems into trusted tools that customers readily adopt. The key is translating algorithmic processes into language anyone can understand.

When an AI recommends products, effective systems explain: “We suggest this based on your purchase history and items you’ve saved as favorites.”

Context matters enormously in explanations. High-stakes decisions require more detailed justification than casual recommendations. Loan applications might include explanations like: “Your application was approved based on income verification, credit history, and debt-to-income ratio.”

Modern explainability goes beyond simple text. Visual representations help customers understand AI logic through decision trees, confidence scores, and factor weightings.
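To show how factor weightings and a confidence score can become a plain-language sentence, here is a minimal Python sketch. It assumes the model already exposes a weight per factor (for example, from a feature-attribution step); the `explain` function and the factor names are illustrative, not a specific library’s API.

```python
def explain(factors: dict[str, float], confidence: float, top_k: int = 2) -> str:
    """Turn factor weights and a confidence score into one readable sentence.

    factors maps a customer-facing factor description to its contribution
    weight; only the top_k factors by absolute weight are mentioned.
    """
    if not factors:
        return f"We suggest this with {confidence:.0%} confidence."
    # Rank by absolute weight so strong negative factors are surfaced too.
    ranked = sorted(factors, key=lambda name: abs(factors[name]), reverse=True)
    top = ranked[:top_k]
    if len(top) == 1:
        reasons = top[0]
    else:
        reasons = ", ".join(top[:-1]) + " and " + top[-1]
    return f"We suggest this based on {reasons} ({confidence:.0%} confidence)."


if __name__ == "__main__":
    weights = {
        "your purchase history": 0.6,
        "items you've saved as favorites": 0.3,
        "current promotions": 0.1,
    }
    print(explain(weights, confidence=0.82))
    # We suggest this based on your purchase history and items you've saved as favorites (82% confidence).
```

For high-stakes decisions such as loan applications, you would likely raise `top_k` and state each factor’s direction, in line with the point above that context determines how much detail an explanation needs.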

Visualizing AI decisions

Dashboard design plays a crucial role in AI transparency. Effective interfaces show decision pathways through flowcharts, highlight key factors with color coding, and provide confidence percentages for AI recommendations.

Interactive elements enhance understanding. Customers can click “Why was this recommended?” to see detailed reasoning. Some systems let users adjust preference settings and immediately see how changes affect AI suggestions.

Real-time transparency indicators, like “AI is analyzing your request” messages, keep customers informed about system processes.

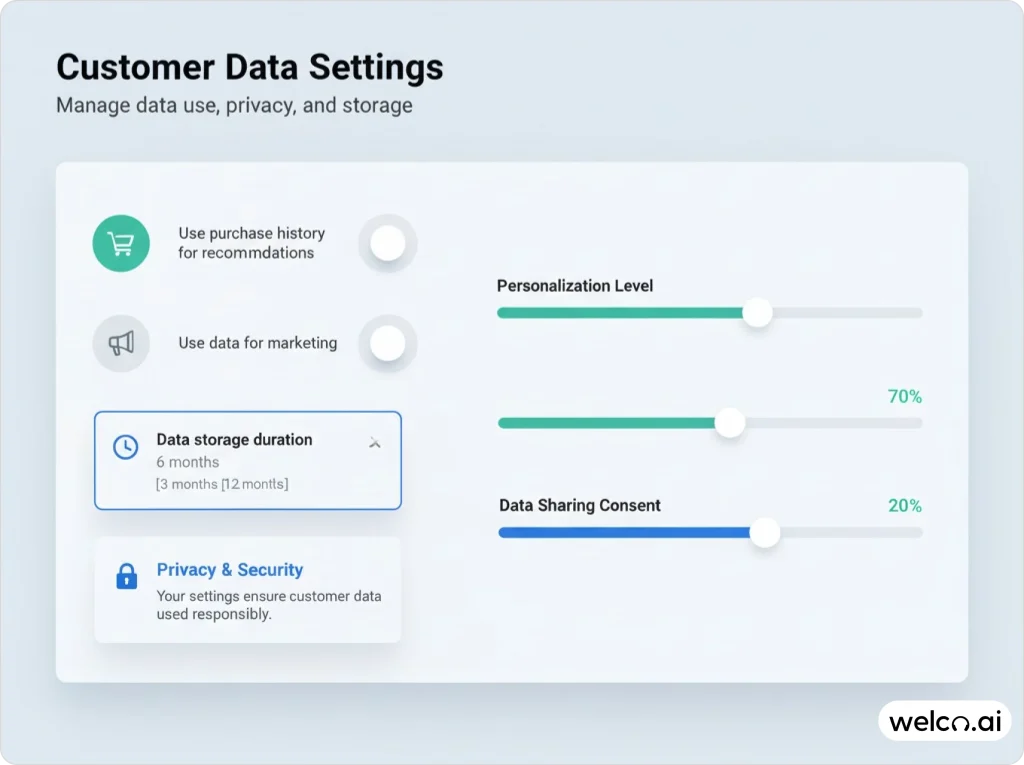

Data usage transparency & user control

How do you communicate AI data practices? Use plain language to explain what data you collect, how long you store it, and who can access it, with granular consent options for different uses.

Complete transparency about data practices empowers users and supports compliance with evolving privacy regulations worldwide.

Customers deserve clear information about data collection, storage, and usage. Leading companies detail exactly what information they gather, how long they keep it, and who has access. Privacy policies increasingly use plain language instead of legal jargon.

Explicit consent mechanisms go beyond simple checkboxes. Modern approaches explain each data usage type separately, allowing granular control. Users can consent to personalization while declining marketing uses of their information.
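Granular, per-purpose consent can be modeled as a small record that defaults to “no” for anything the customer has not explicitly approved. The sketch below is a minimal Python illustration; the purpose names and the `ConsentRecord` class are hypothetical, and a real system would also need to persist and timestamp each choice for auditing.

```python
from dataclasses import dataclass, field

# Hypothetical data-use purposes; each one is consented to separately.
PURPOSES = ("personalization", "marketing", "analytics")


@dataclass
class ConsentRecord:
    """Per-purpose consent choices for one customer."""

    choices: dict[str, bool] = field(default_factory=dict)

    def record(self, purpose: str, allowed: bool) -> None:
        """Store the customer's explicit choice for a single purpose."""
        if purpose not in PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.choices[purpose] = allowed

    def allows(self, purpose: str) -> bool:
        """Default-deny: no recorded choice means no permission."""
        return self.choices.get(purpose, False)


if __name__ == "__main__":
    consent = ConsentRecord()
    consent.record("personalization", True)
    consent.record("marketing", False)
    for purpose in PURPOSES:
        print(purpose, consent.allows(purpose))
```

This mirrors the example in the text: the customer opts in to personalization, declines marketing, and never answered about analytics, so analytics stays off.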

User empowerment through control options builds lasting trust. Customers appreciate settings that let them adjust AI behavior, delete stored data, or opt out of specific features while maintaining core functionality.

Communicating data practices

Privacy-by-design principles now guide how companies present data practices. Instead of buried policies, forward-thinking organizations showcase privacy protections prominently in user interfaces.

The difference shows in sample language. Old approach: “We may use your data for various purposes.” New, transparent approach: “We use your purchase history to suggest relevant products. You can disable this feature anytime.”

Customer empowerment features increasingly include data dashboards where users can see exactly what information is stored, how it’s being used, and options to modify or delete specific data points.

Accountability and error handling

How do you handle AI errors? Acknowledge mistakes immediately, explain correction steps, and provide clear paths to human support with responses like “I apologize for the confusion and have connected you with a specialist.”

When AI makes mistakes, clear accountability processes maintain trust and demonstrate responsibility for system outcomes.

Human oversight remains essential for AI accountability. Customers need assurance that real people monitor AI systems and can intervene when problems arise. Clear escalation paths let users quickly reach human agents for complex issues.

Error acknowledgment and correction build credibility. When AI chatbots make mistakes, effective responses include: “I apologize for the confusion. I’ve noted this error in our system and connected you with a specialist who can resolve your specific situation.”

Continuous improvement communication shows customers that companies learn from mistakes. Regular updates about system improvements demonstrate an ongoing commitment to better service.

Building redress mechanisms

Customer recourse pathways must be clearly defined and easily accessible. This includes complaint processes, error correction procedures, and compensation policies when AI systems cause problems.

Banking offers a clear example of effective redress. When AI incorrectly flags legitimate transactions as fraudulent, leading banks now provide immediate provisional credit while human review proceeds. This approach prioritizes customer convenience while maintaining security.

Feedback loops enable system refinement. Companies increasingly show customers how their feedback directly improves AI performance, creating collaborative improvement relationships.

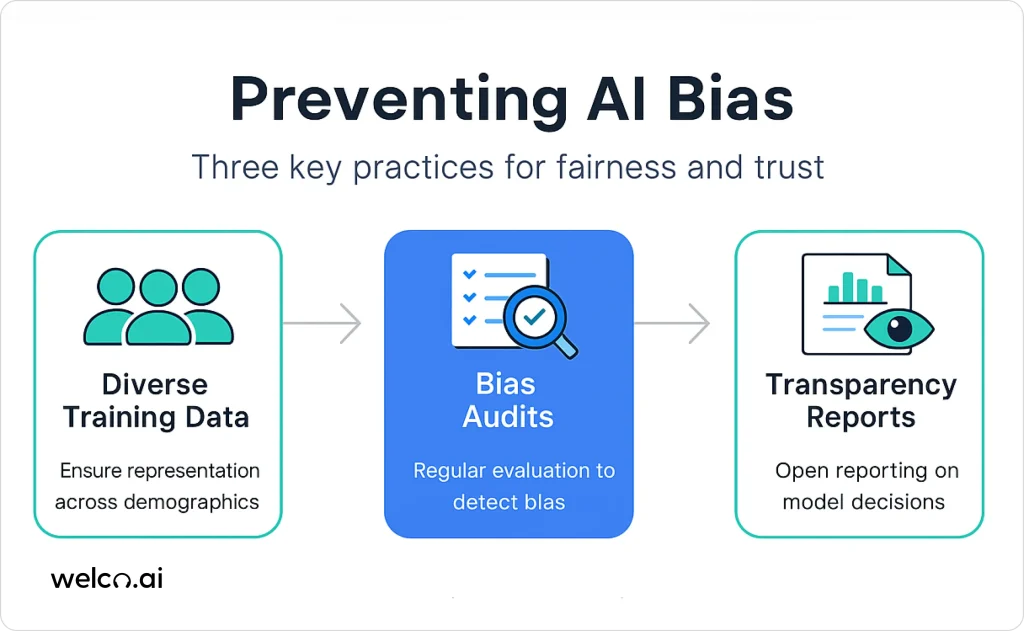

Fairness and bias mitigation

How do you prevent AI bias? Use diverse training datasets, conduct regular bias audits across demographic groups, and publish transparency reports about fairness testing results.

Proactive bias prevention demonstrates commitment to treating all customers equitably, regardless of background or demographics.

Inclusive dataset requirements form the foundation of fair AI systems. Companies must ensure training data represents diverse customer populations to prevent discriminatory outcomes. Regular auditing helps identify and correct biased patterns before they impact customers.

Bias auditing processes increasingly include external validation. Independent reviews provide a credible assessment of AI fairness, especially important for sensitive applications like lending or hiring.

Public disclosure of fairness initiatives builds credibility. Leading organizations publish diversity reports for their AI training data and share results of bias testing.

Bias auditing in sensitive industries

Financial services face particular scrutiny for AI fairness. Banks now conduct regular bias audits across demographic groups, testing whether loan approval algorithms treat all qualified applicants equally, regardless of protected characteristics.
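A first-pass bias audit often starts by comparing approval rates across groups. The Python sketch below assumes you have a log of (group, approved) decisions and flags any group whose approval rate falls below 80% of the best-performing group’s rate. That threshold mirrors the “four-fifths” rule of thumb from U.S. employment-selection guidance; it is a screening heuristic, not a legal test for lending, and a fuller audit would compare outcomes among similarly qualified applicants. The function names are illustrative.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def impact_ratios(rates, threshold=0.8):
    """Compare each group's rate with the highest rate.

    Returns {group: (ratio, flagged)}, where flagged is True when the ratio
    is below threshold and the group may warrant a closer look.
    """
    best = max(rates.values())
    if best == 0:
        # No group was approved at all, so approval rates show no disparity.
        return {group: (1.0, False) for group in rates}
    return {
        group: (rate / best, rate / best < threshold)
        for group, rate in rates.items()
    }


if __name__ == "__main__":
    log = ([("A", True)] * 8 + [("A", False)] * 2
           + [("B", True)] * 5 + [("B", False)] * 5)
    rates = approval_rates(log)
    print(rates)
    print(impact_ratios(rates))
```

In this toy log, group B’s rate (50%) is 62.5% of group A’s (80%), so B is flagged for human review rather than automatically declared biased.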

Healthcare applications require even more rigorous bias prevention. AI diagnosis tools undergo extensive testing to ensure accuracy across different patient populations, preventing disparities in medical care.

Regulated industries increasingly adopt compliance checklists that include bias testing requirements, documentation standards, and remediation procedures when bias is detected.

Proactive communication & customer education

Educational approaches prevent misinformation and build confidence in AI capabilities while addressing common customer concerns.

Customer education strategies help bridge knowledge gaps that fuel AI distrust. Companies increasingly provide resources explaining how their AI works, what it can and can’t do, and how customers benefit from the technology.

FAQ optimization addresses specific transparency concerns. Instead of generic AI information, effective FAQs answer questions like “How do you protect my data?” and “Can I opt out of AI features?”

Community engagement through social media and forums creates ongoing dialogue about AI practices. This two-way communication helps companies understand customer concerns while educating about AI benefits.

Tools for building AI literacy

Interactive tutorials let customers explore AI features safely. Demo environments show how AI makes recommendations without affecting real accounts or data.

Educational prompts within interfaces explain AI functions contextually. When customers first encounter AI features, helpful tooltips explain what’s happening and why it’s beneficial.

Community engagement approaches include webinars, Q&A sessions, and customer advisory panels that provide input on AI development priorities and transparency improvements.

How do you design trustworthy AI interfaces?

Trustworthy AI design combines visual trust indicators, clear escalation options, and feedback mechanisms that make AI interactions feel safe and predictable.

Building trust through design empathy

How do you design AI interfaces that build trust? Use friendly visual cues, conversational language that acknowledges limitations, and clear security indicators with prominent human escalation options.

Human-centric interface principles recognize that AI interactions should feel natural and comfortable. Visual cues like friendly colors and clear typography reduce anxiety about AI systems.

Emotional tone in AI communications affects trust significantly. Conversational language that acknowledges limitations builds more credibility than overselling AI capabilities. Phrases like “I’ll do my best to help” create appropriate expectations.

Trust indicators in interfaces include security badges, privacy confirmations, and clear labeling of AI vs. human interactions. These visual elements reassure customers about system reliability.

Human in the loop: seamless escalation

Fallback processes ensure customers never feel trapped in AI systems. Clear “speak to human” options should be prominent and immediately accessible throughout AI interactions.

Urgency triggers automatically escalate sensitive situations to human agents. Keywords indicating frustration, legal issues, or emergencies prompt immediate human intervention.
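Keyword-based urgency triggers can be sketched with simple whole-word matching. This Python example is a minimal illustration: the trigger lists are hypothetical placeholders, and production systems typically combine rules like these with sentiment or intent models rather than relying on keywords alone.

```python
import re

# Hypothetical trigger phrases per category; real deployments tune these carefully.
URGENCY_TRIGGERS = {
    "frustration": ("frustrated", "ridiculous", "speak to a human", "useless"),
    "legal": ("lawyer", "lawsuit", "attorney", "sue"),
    "emergency": ("emergency", "fraud", "stolen", "urgent"),
}


def escalation_reasons(message: str) -> list[str]:
    """Return the trigger categories found in message (empty list = no escalation)."""
    text = message.lower()
    found = []
    for category, phrases in URGENCY_TRIGGERS.items():
        # Word boundaries stop "sue" from matching inside words like "issue".
        if any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrases):
            found.append(category)
    return found


if __name__ == "__main__":
    for msg in ("My card was stolen and I want a lawyer",
                "I have an issue with my order"):
        print(msg, "->", escalation_reasons(msg))
```

Returning the matched categories, rather than a plain yes/no, lets the handoff carry context so the human agent knows why the conversation was escalated.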

Compliance-first designs ensure AI systems meet regulatory requirements while maintaining user-friendly interfaces. This balance reduces legal risk while preserving a positive customer experience.

Feedback loops and continuous improvement

How do you improve AI transparency over time? Collect user feedback through ratings and comments, communicate improvements regularly, and invite customers to participate in beta testing programs.

User feedback collection mechanisms built into AI interfaces enable ongoing system refinement. Simple thumbs-up/down ratings and comment boxes gather valuable improvement data.
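Thumbs-up/down ratings become useful once they are aggregated per feature. Here is a minimal Python sketch, assuming each rating arrives as a (feature, thumbs_up) pair; `summarize_feedback` is a hypothetical helper, not part of any particular analytics tool.

```python
from collections import Counter


def summarize_feedback(ratings):
    """Map each AI feature to (share of thumbs-up votes, total votes)."""
    ups, totals = Counter(), Counter()
    for feature, thumbs_up in ratings:
        totals[feature] += 1
        if thumbs_up:
            ups[feature] += 1
    return {
        feature: (ups[feature] / totals[feature], totals[feature])
        for feature in totals
    }


if __name__ == "__main__":
    ratings = [
        ("recommendations", True),
        ("recommendations", True),
        ("recommendations", False),
        ("chatbot", False),
    ]
    summary = summarize_feedback(ratings)
    # The feature with the lowest thumbs-up share is the first candidate for fixes.
    worst = min(summary, key=lambda feature: summary[feature][0])
    print(summary, "needs attention:", worst)
```

Reporting the vote count next to the share keeps a single negative rating (like the chatbot’s here) from looking as alarming as a pattern across many customers.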

Transparency about improvements shows customers how their feedback directly enhances AI performance. Regular updates communicate system enhancements and new features based on customer input.

Collaborative enhancement approaches invite customers to participate in AI development through beta testing programs and feature suggestion systems.

What are Common Trust Barriers in AI Adoption and How to Overcome Them?

The main barriers are concerns about data collection (68% of U.S. consumers, according to KPMG), algorithmic opacity, and regulatory compliance worries.

Common pain points

Data collection concerns remain high among consumers. A KPMG survey shows that 68% of U.S. citizens are concerned about the volume of data being collected by businesses.

Algorithmic opacity concerns persist because many customers lack an understanding of how AI makes decisions. Technical explanations often fail to address emotional concerns about losing human control.

Regulatory scrutiny creates additional anxiety. Customers worry about compliance failures and data breaches, so companies need to communicate proactively about their security measures and regulatory adherence.

Practical solutions for customers

How do you overcome AI trust barriers? Start with low-risk transparency pilots, involve customers in development decisions, and run educational campaigns that address emotional concerns.

Transparency pilots allow gradual AI introduction with extensive explanation and support. Starting with low-risk applications builds confidence before expanding to more sensitive use cases.

Co-creation approaches invite customers to participate in AI development decisions. This involvement increases buy-in and addresses specific trust concerns through direct collaboration.

Educational campaigns must address emotional as well as rational concerns. Success requires acknowledging fears while providing concrete evidence of AI benefits and safety measures.

Welco’s Approach to Transparent AI

Welco’s comprehensive transparency framework sets the industry standard for trustworthy AI implementation.

Welco’s trust-centered design principles

Welco’s brand philosophy prioritizes transparency at every stage of AI development and deployment. Our approach differs from competitors by building transparency into system architecture rather than adding it later.

Specific transparency tools include real-time explanation dashboards, granular privacy controls, and proactive bias monitoring systems. These features provide customers with unprecedented visibility into AI operations.

Disclosure practices at Welco.ai exceed industry standards. We provide detailed algorithm information, data usage specifications, and performance metrics that competitors typically keep proprietary.

Industry Comparison: How Welco Stands Out

Welco’s approach differs fundamentally from Zendesk’s basic disclosure requirements and Salesforce’s reactive transparency measures. We embed transparency proactively throughout the customer journey.

Unique transparency features include predictive explanation systems that anticipate customer questions, multi-modal explanation formats, and continuous transparency monitoring that adapts to changing customer needs.

Market positioning advantages stem from our transparency-first philosophy. While competitors add transparency features to existing systems, Welco builds transparency into core architecture from inception.

Looking Ahead: The Future of Trustworthy AI

Preparing for regulatory changes and evolving expectations ensures transparency strategies remain effective as AI continues advancing.

Regulatory trends and ethical standards

What AI regulations are coming in 2025? Expected regulations include mandatory disclosure requirements, algorithmic auditing standards, and enhanced data protection measures following the EU AI Act model.

The EU AI Act, which entered into force in August 2024, includes transparency obligations such as informing people when they are interacting with an AI system, and it sets precedents that other jurisdictions will likely adopt.

Ethics-by-design frameworks are becoming regulatory requirements rather than voluntary best practices. Companies must embed ethical considerations throughout AI development processes.

Collaborative stakeholder approaches increasingly include customer input in AI governance decisions. This trend toward participatory AI development will continue expanding.

Final Takeaways

Embedding transparency requires a systematic approach across technology, processes, and culture. Success demands commitment from leadership and investment in appropriate tools and training.

Improving AI literacy benefits everyone. Companies should invest in customer education programs that build understanding and confidence in AI technologies.

Next steps include conducting transparency audits, implementing customer feedback systems, and developing comprehensive AI communication strategies. The time for reactive approaches has passed; proactive transparency is now essential for competitive success.

The future belongs to organizations that view transparency not as a compliance requirement but as a competitive advantage in building lasting customer relationships.

Frequently Asked Questions

Why is transparent communication critical for AI adoption?

Transparent communication builds customer trust by making AI systems understandable, explainable, and fair, helping users feel confident in how decisions are made and how their data is used.

How should companies disclose the use of AI in customer interactions?

Companies should clearly announce when customers are interacting with AI (e.g., “Hi, I’m an AI-powered assistant”) and openly explain the limitations and boundaries of those systems. This prevents misunderstandings and builds credibility.

What role does explainability play in customer trust?

Explainability means companies must translate AI decisions into plain language, use visual aids (like dashboards) to clarify reasoning, and offer customers options to review or challenge decisions, thereby increasing understanding and trust.

How can businesses ensure fairness and accountability in AI systems?

Businesses should conduct regular bias audits with diverse data, provide clear redress mechanisms for errors, and keep customers informed about privacy, data practices, and system improvements, so that errors are acknowledged and corrected transparently.